Agentic AI

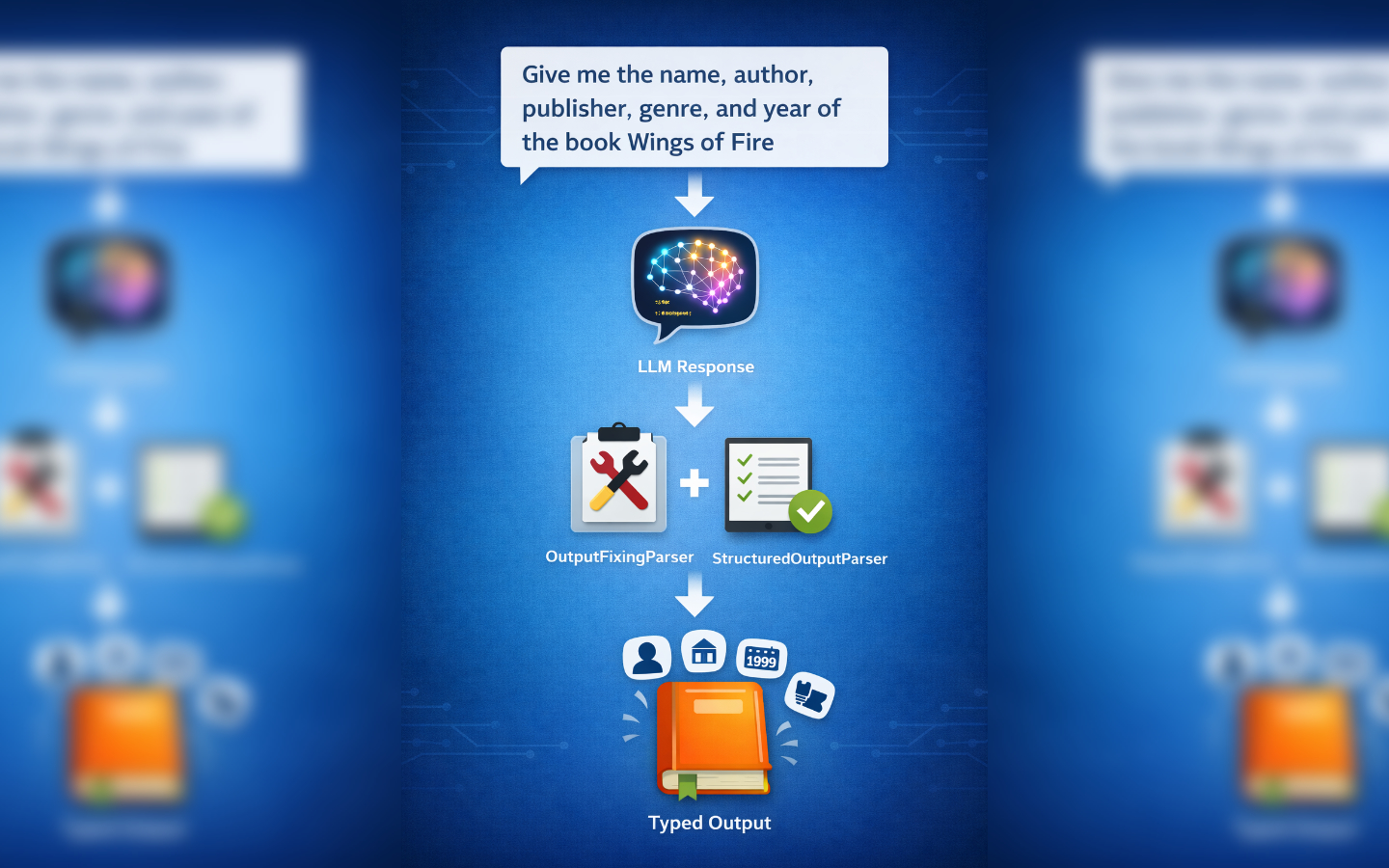

Structured Output in LangGraph

Large language models are incredibly versatile, but when your code depends on predictable data structures, free‑form text can be a headache. The same information can be expressed in countless ways, making downstream processing error-prone. Structured output bridges this gap: by defining a schema, …

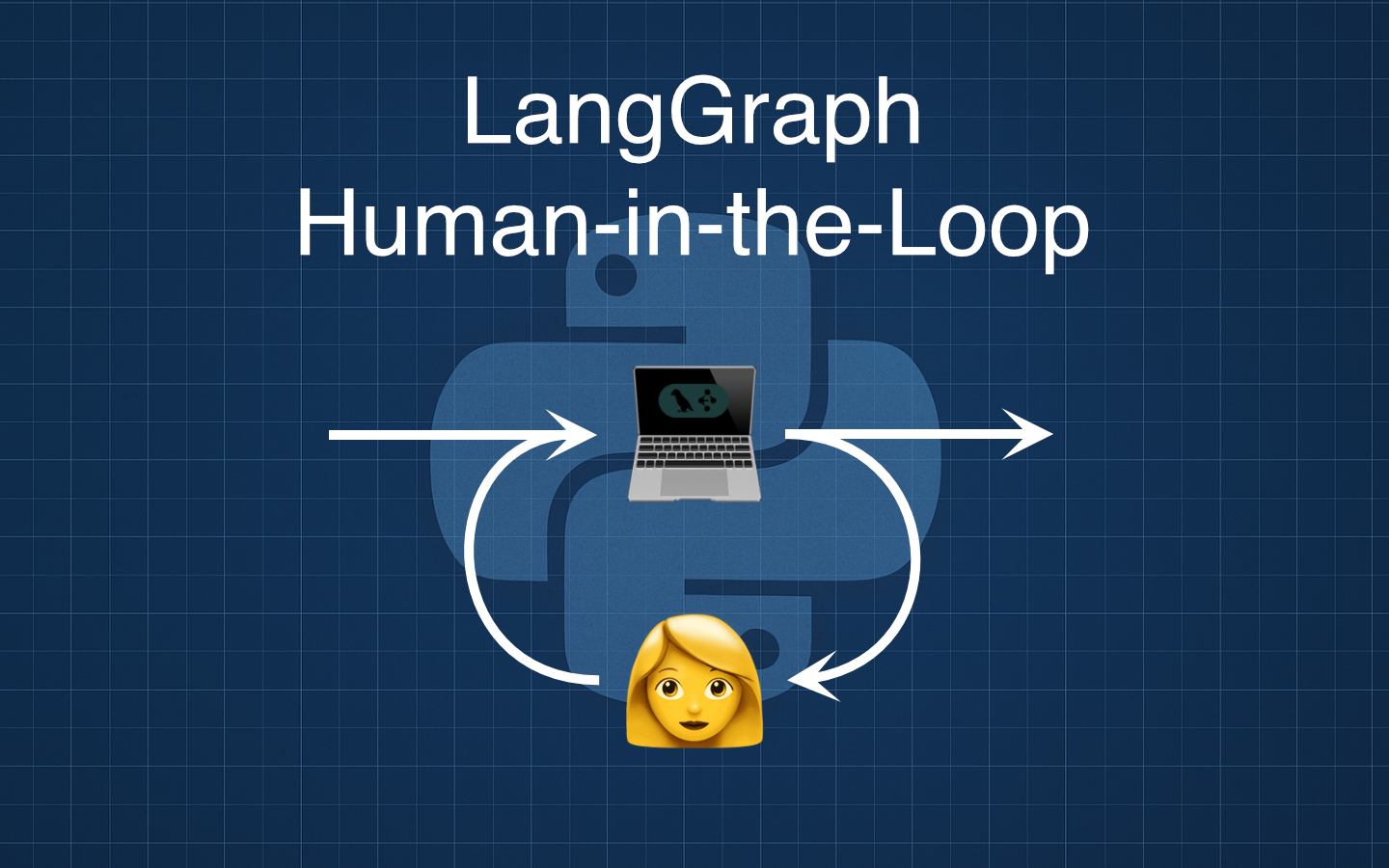

Pausing for Human Feedback in LangGraph

Adding a human-in-the-loop step to a LangGraph flow is an easy way to improve quality and control without adding branching or complexity. In this post, we will build a tiny three-node graph that drafts copy with an LLM, pauses for human feedback, and then revises the draft, using LangGraph’s …

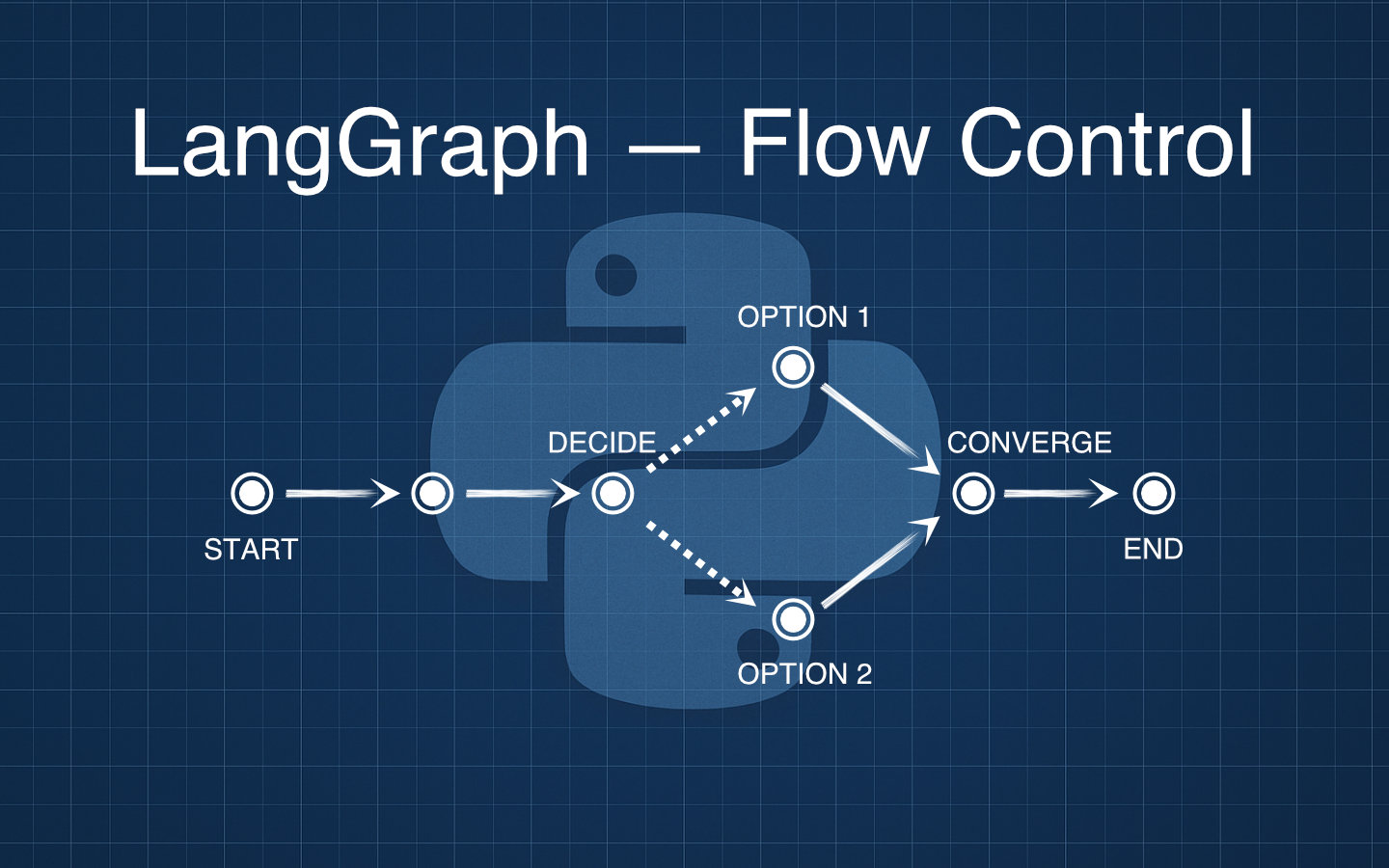

Controlling Flow with Conditional Edges in LangGraph

Conditional edges let your LangGraph apps make decisions mid-flow. Today we will branch our simple joke generator so it picks either a pun or a one-liner, while keeping wrap_presentation exactly as it was.

In the previous post, we built a two-node graph with joke_writer and wrap_presentation, and now we will …

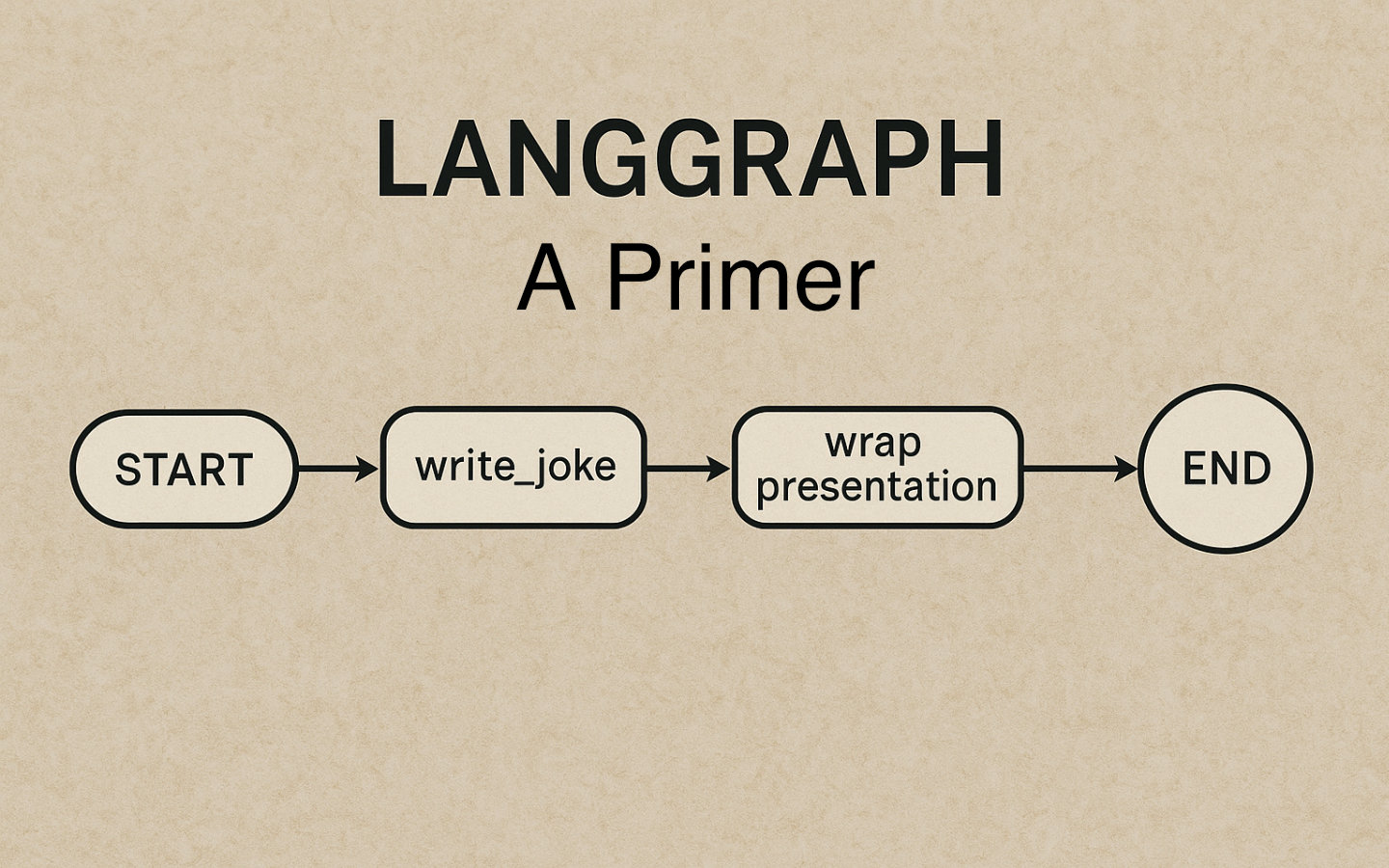

A Primer in LangGraph

LangGraph makes it easy to wire simple, reliable LLM workflows as graphs. In this post, we will build a tiny two-node graph that turns a topic into a joke and then formats it as a mini conversation, ready to display or send.

By the end, you will have a minimal Python project with a typed JokeState …

Using Agentic AI to Get the Most from LLMs

Agentic AI marks a major shift in how artificial intelligence systems operate, moving from single, monolithic models to autonomous, goal-oriented agents.

If you missed it, my previous article on how LLMs work under the hood lays the foundation for how large language models …